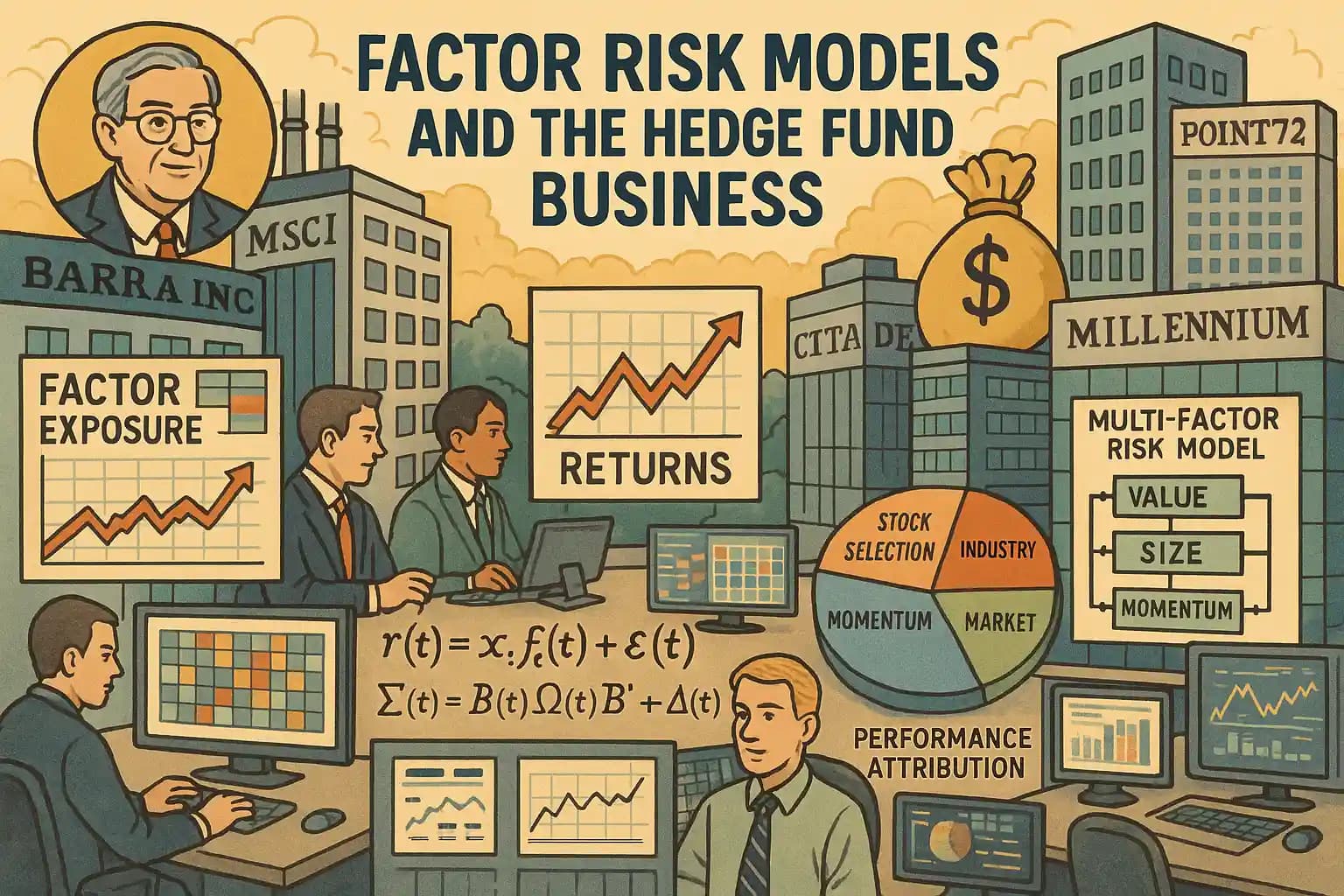

The Birth of an Industry Standard

In the mid-1970s, a Berkeley economics professor named Barr Rosenberg fundamentally changed how institutional investors think about risk. Rosenberg founded Barra Inc. in 1975, introducing the concept of multi-factor risk models that would become the industry standard for portfolio risk management. His core insight was that stocks with similar fundamental characteristics tend to move together, and these common movements can be systematically measured and predicted. This might sound simplistic and pointless, but if you really follow the chain of logic all the way and figure out the math, it turns into a fabulously useful tool for understanding risk and performance.

The company's first model, the USE1 (US Equity Model 1), launched in 1975, followed by USE2 in 1985 and USE3 in 1997. These models gained traction through the 1980s and early 1990s, initially among quantitative institutional investors and asset managers. By the late 1990s, as hedge funds exploded from managing under $100 billion to becoming a trillion-dollar industry, Barra models had become essential infrastructure. The 2000s saw near-universal adoption among sophisticated funds, particularly after Morgan Stanley acquired Barra for $816 million in 2004 and merged it with MSCI (Morgan Stanley Capital International) to create MSCI Barra.

Today, MSCI commands a market capitalization of approximately $44 billion, with total annual revenues of nearly $2.9 billion in 2024. The Analytics segment, which houses the risk models business including the Barra suite, generated $675 million in revenue for 2024 at an adjusted EBITDA margin of nearly 50%. So the risk models business is insanely profitable, which isn't surprising considering that it is absolutely critical infrastructure for portfolio construction and risk management across the global investment industry.

Today, MSCI's Barra models are accessed by over 1,200 financial institutions worldwide. Major hedge funds and asset managers pay substantial licensing fees for this critical infrastructure; enterprise-wide licenses for large multi-strategy platforms can exceed $1 million annually when factoring in multiple models, data feeds, and analytics platforms. For firms like Millennium, Citadel, Point72, and Balyasny, these costs are trivial compared to the risk management capabilities they provide. The models have evolved from an academic curiosity to table stakes for any serious institutional investor.

The Core Problem: Where Do Returns Actually Come From?

Imagine you have your own pod at one of the pod-shops. To make it concrete, suppose you're running a $500 million long/short equity book at Millennium. Your portfolio is up 12% year-to-date, crushing your benchmark. The risk team sends you a report showing that 8% of your return came from being accidentally overweight momentum stocks during a momentum rally, 3% came from your intentional stock picks, and 1% from random noise. Suddenly, your "alpha" doesn't look so impressive!

This is the daily reality at multi-strategy platforms. Every morning, portfolio managers wake up to factor exposure reports showing exactly what risks they're taking, whether intentional or not. The platform's risk system has already calculated whether their book is within limits on market beta, sector tilts, and style factors. If they're over the line on any dimension, they need to hedge before they can put on new trades.

When you buy a stock, you're not just buying that specific company; you're buying a bundle of characteristics. You're buying its size, its leverage, its momentum, its volatility profile, its sector exposure, and dozens of other attributes. Each of these attributes carries its own risk and return profile that can dominate your performance if you're not careful.

Beyond helping to understand the risk profile of a stock or a portfolio, factor models are also incredibly useful for helping to disentangle investing skill from "luck"; that is, has an investment manager achieved their returns and performance through a rigorous, repeatable approach that is driven by true, measurable alpha? Or how much of that performance came from "luck" (i.e., being long or short the market at the right time; having a huge factor imbalance that happened to work out very well over the time period in question; etc.).

The Mathematical Architecture: How Factor Models Actually Work

The genius of factor models lies in solving an impossible problem through elegant simplification. Consider what portfolio managers face: with thousands of stocks in play, they'd theoretically need to estimate millions of correlations between every possible pair. Factor models slash through this complexity with a powerful insight: most stocks move together because they share common characteristics, not because of unique pairwise relationships.

The math starts with decomposing each stock's return into what matters and what's noise. For any stock i at time t, the return breaks down as:

ri,t=xi,t⊤ft+εi,t

You don't really need an equation to understand this, though: it's just a decomposition of the returns, breaking it down into buckets like a forensic accountant might to a line item in a financial statement. The xi,t captures how exposed stock i is to various factors (how much of a growth stock is Apple? How sensitive is JPMorgan to interest rates?). The ft represents what those factors actually returned that day. The leftover εi,t is the idiosyncratic piece, or what happened to that specific stock that had nothing to do with broader patterns. Every basis point gets assigned to exactly one source, with no double-counting or gaps.

But attribution is just the warm-up act. The real value comes from using this structure to forecast risk. The model extends the decomposition to the entire covariance matrix:

Σt=BtΩtBt⊤+Δt

This formula encodes a crucial assumption that makes the whole enterprise tractable. The matrix Bt contains all stocks' factor exposures, Ωt captures how the factors themselves correlate, and Δt holds the stock-specific variances. The model assumes stocks only correlate through their shared factor exposures; that is, once you control for the fact that Microsoft and Apple are both large-cap tech stocks with similar growth profiles, their remaining risks should be independent. It's not perfectly true, but it's true enough to compress an intractable problem into something a computer can actually solve.

The daily machinery that keeps this running is surprisingly straightforward. Each day, the system estimates factor returns using weighted least squares regression across the entire stock universe. The weights, which are typically proportional to the square root of market cap, thread a careful needle. Pure market-cap weighting would let Apple and Microsoft dominate everything; equal weighting would let penny stocks add statistical noise. The square-root compromise keeps mega-caps from monopolizing the model while preventing micro-caps from distorting it. This regression runs fresh every trading day, allowing the model to adapt as relationships shift; this is important, since what drives markets during a Fed tightening cycle differs vastly from what matters during a tech boom.

Life Inside a Pod: The Daily Workflow

Let me walk you through a typical morning for a pod PM (portfolio manager) at a place like Citadel or Millennium. You arrive at 6:30 AM and immediately pull up your risk dashboard. The overnight risk batch has already run, calculating your exposures to every factor in the model. The first thing you check: are you within limits?

The platform has given you strict boundaries. Your market beta must stay between -5% and +5%. Your exposure to any single style factor can't exceed 0.5 standard deviations. Industry tilts are capped at 3% net. If you're outside any limit, you can't trade new positions until you hedge back to compliance. Some shops are stricter than others (Citadel is the toughest of all, demanding tight bounds on every factor tilt).

Your screen shows a heat map of factor exposures. You're running 0.3 standard deviations long momentum, -0.2 on value, essentially flat on size. The system also shows how these exposures have drifted over the past week. That momentum exposure crept up because several of your longs have been running and now screen as high momentum names. You need to decide: is this momentum exposure intentional, or should you hedge it out?

The pre-trade optimizer is your next stop. You've identified five new longs and three new shorts you want to put on today. You feed them into the optimizer along with your current book. The system solves a constrained optimization problem, telling you the exact sizes that maximize your expected alpha while staying within risk limits. It might tell you to trade 70% of your intended size on the longs and 120% on the shorts to maintain market neutrality.

But here's where it gets interesting. The optimizer also suggests a hedge basket: short $2 million of the momentum ETF, buy $1.5 million of the value ETF, and short specific sector ETFs to neutralize your unintended industry tilts. The system has solved a least-squares problem to find the minimum-cost hedge that brings you back to neutral on all constrained factors.

The Multi-Strategy Ecosystem: How Platforms Use Factor Models

At a multi-strategy platform, factor models aren't just risk management tools; they're like the "central nervous system" of the entire operation. The platform might have 50 pods, each running $200 million to $2 billion. Without factor models, the risk team would have no idea if all 50 pods were making the same bet. And this is really critical for the "pod shops" because the dirty secret of this corner of the hedge fund world is that it's only possible because of the power of leverage. These firms borrow between 5 to 7 times their LP ("Limited Partner," i.e., the institutions and high net worth individuals that invest in the hedge fund itself) capital from Wall Street banks and use this to finance the gross positions of their pods.

This leverage is what allows the pod-shop firms to charge the LPs 20% of the profits while also giving ~20% of the profits to each pod's PM. But leverage cuts both ways, and the arithmetic is brutal. Consider a pod running $1 billion gross with 6x leverage (so $167 million of actual LP capital). If that pod loses 5%, which is just $50 million in gross terms, it represents a 30% loss on the underlying LP capital. Enough pods doing this simultaneously could crater the entire fund's performance for the month.

That's why these firms have such a reputation for cutting risk quickly when a pod experiences a draw-down. They are infamous for the "shoulder tap" when you have a sudden draw-down (note that these are measured from your peak, so you can get your risk budget slashed even if you're still up for the year, just because you lost a lot quickly), leading to your capital account to get cut in half overnight. Then if you keep losing money, you might get cut in half again. Keep it up, and you might find yourself out of a job! Well, now you can understand why.

Now, getting back to how these firms use the risk models for getting a "360 view" of the firm's risk, here's how it works in practice: every pod's positions flow into a central risk system that calculates factor exposures in real-time. The platform risk manager sees a consolidated view: the firm is running $500 million net long momentum, $300 million short value, $200 million long small-cap. They can drill down to see which pods contribute to each exposure.

When a factor starts moving against the firm, the risk team springs into action. Say momentum suddenly crashes 3% in a day, costing the firm $15 million. The system immediately identifies which pods are exposed. Some might be intentionally long momentum as part of their strategy; they're allowed to keep their positions. Others might have drifted into momentum accidentally; they get a call to hedge immediately.

The factor model also drives capital allocation. Pods that generate consistent idiosyncratic returns (high information ratio, which is the holy grail metric that measures your excess returns per unit of risk taken, where anything above 0.5 is decent and above 1.0 is excellent, on stock-specific PnL) get more capital. Those whose returns are mostly explained by factor timing get scrutinized. Are they really adding value, or are they just levered beta trades that anyone could implement with ETFs?

Performance evaluation happens through the factor lens too. At month-end, each pod gets an attribution report breaking down their returns. A typical report might show: Market: +0.5%, Industries: -0.3%, Momentum: +2.1%, Value: -0.8%, Size: +0.2%, Stock Selection: +3.2%. The platform wants to see the bulk of returns coming from stock selection, not factor bets.

The Factors Decoded: A Practitioner's Guide

Let me take you through each factor as it's actually implemented and used on a trading desk, not just as an academic concept. When you see "ZS" in a factor name on your risk screen, that means z-score; the factor has been standardized cross-sectionally to have mean zero and unit standard deviation.

Style Factors: The Building Blocks

Residual Volatility (RESVOL) measures how much a stock bounces around after removing market effects. Every morning, the model calculates each stock's volatility beyond what beta (its sensitivity to overall market moves) would predict. To build this factor, we first run rolling regressions (sliding-window calculations that update as new data comes in) to estimate beta, then compute the standard deviation of the residuals (the leftover movements that beta can't explain). But that's just the start. We also incorporate high-low price ranges (which capture intraday volatility) and GARCH estimates (a statistical method that recognizes volatility tends to cluster, with calm periods followed by calm periods, while chaos breeds more chaos).

The construction is pretty intricate. After computing these volatility descriptors, each gets "winsorized" (i.e., the most extreme values at each end get clipped off) at roughly 3 standard deviations to prevent outliers from breaking the model. Then they're z-scored cross-sectionally and combined with weights like 75% for residual standard deviation, 15% for range-based measures, and 10% for GARCH. These weights aren't arbitrary: residual SD dominates because it's the most stable measure over 60-90 day windows; range adds critical intraday gap risk that closing prices miss entirely (think biotech trial results); GARCH gets minimal weight because while it captures volatility clustering, over-weighting it would make your risk model whipsaw with every vol spike. The final factor is orthogonalized to beta so that we don't double-count.

So, why does this matter for your pod? High residual volatility exposure means you're loaded up on stocks that could blow up from company-specific news. The platform watches this carefully because idiosyncratic blow-ups (stock-specific disasters unrelated to broader market moves) are the hardest to hedge and can trigger stop-outs (forced liquidations when losses hit predetermined limits).

Earnings Yield (EARNYILD) captures classic value through the earnings-to-price lens, but with sophistication that matters in practice. The model combines forward earnings yield (about 75% weight) with cash earnings yield (15%) and trailing earnings yield (10%). The forward-looking component dominates because markets are forward-looking.

The devil is in the implementation details. Negative earnings create havoc; you can't just compute E/P when E is negative. The model uses various transformations, like ranking within positive-earnings subsets or using sector-relative measures. Every input is winsorized and z-scored before combination. For a pod, this factor reveals whether your "fundamental" stock picks are really just a value bet in disguise.

Growth (GROWTH) sits on the opposite side of the style spectrum from value. The model combines analyst estimates of forward earnings growth (70% weight) with historical 5-year earnings and sales growth rates. Base effects are a nightmare here; growth rates explode when calculated from near-zero bases. The model handles this through careful winsorization and sometimes exclusion of problematic observations.

The growth factor helps platforms understand whether a pod's technology picks are genuine innovation plays or just riding the growth factor. During growth versus value rotations, which can be violent, knowing your exposure is the difference between surviving and getting stopped out.

Size (SIZE) might seem simple, just the log of market cap, z-scored. But size permeates everything in equity markets. It correlates with liquidity (how easily you can trade without moving the price), analyst coverage, index membership, and dozens of other characteristics. The log transformation is crucial because market cap distributions are so skewed (Apple at $3 trillion versus a small-cap at $500 million creates a 6,000x difference that would mathematically overwhelm everything else); without it, a few mega-caps would define the entire factor. The log nicely solves this by turning that 6,000x difference into a manageable ~8x difference (since log($3 trillion) ≈ 28.7 and log($500 million) ≈ 20.0), while still preserving the ordering, so that bigger companies remain bigger, just on a scale that doesn't break the math.

For pods, size exposure often creeps in accidentally. You find amazing ideas in mid-cap industrials, build concentrated positions, and suddenly you're massively long the size factor without meaning to be. The platform's risk system flags this before it becomes a problem.

Leverage (LEVERAGE) combines market leverage (debt relative to market cap, 75% weight), book leverage, and debt-to-assets ratios. The market-based measure gets the highest weight because market values are forward-looking while book values are backward-looking. Implementation requires careful handling of negative book equity and different accounting standards.

During credit stress, leverage becomes toxic. The March 2020 COVID crash saw leveraged companies down 40% while unleveraged ones fell just 20%. Pods need to know their leverage exposure to size positions appropriately and avoid catastrophic drawdowns.

Momentum: The Persistence of Winners and Losers

Short-term Momentum (MOM3WKZS) captures very recent price trends over 15 trading days. The calculation uses cumulative log returns (proper compounding) ending one or two days ago to avoid microstructure reversal effects. After computing returns, the cross-sectional z-score standardizes across the universe.

This factor picks up news momentum and positioning flows that persist for a few weeks. For a pod, short-term momentum exposure often builds up accidentally when your winners run. The risk system alerts you when this exposure gets large enough to dominate your PnL if momentum reverses.

Medium-term Momentum (MOM11MNZS) is the classic academic momentum factor, measuring returns from 12 months ago to 1 month ago. That one-month skip is absolutely critical; including the most recent month destroys the factor's efficacy because short-term reversal effects dominate.

Momentum is perhaps the most studied factor after value, and for good reason. It's been profitable across markets and centuries, but it's also prone to violent crashes. The March 2009 momentum crash saw the factor down 30% in two weeks as beaten-down financials rocketed higher. Pods running momentum strategies need explicit crash hedges. Over the past few years (with some notable exceptions over shorter sub-periods), momentum has been very good, since winners like NVDA and MSFT just keep going higher and higher.

Market Microstructure: Liquidity and Trading

Liquidity (LIQUIDTY) measures how easily you can trade a stock without moving the market. The model combines share turnover at multiple horizons (1-month at 35% weight, 3-month at 35%, 12-month at 30%). Turnover is volume divided by shares outstanding, carefully adjusted for ADRs and dual listings that can inflate apparent liquidity.

For pods, liquidity exposure determines your capacity and trading costs. The platform often scales position limits by liquidity; you can run bigger in liquid names. During de-risking events, liquidity exposure predicts which positions you can exit quickly versus which will trap you. You want to avoid getting into the stock market equivalent of a "roach motel" (from the old bug spray commercial, which explained how "Roaches check in, but they don't check out!"), which becomes more like a burning movie theater in a market meltdown.

Ownership Structure: Following the Crowd

Hedge Fund Ownership (HFOWN) tracks what percentage of shares are held by hedge funds, calculated from quarterly 13F filings, which are the mandatory SEC reports where any institution managing over $100 million must disclose their long US equity positions. This data is delayed (45 days after quarter-end) and incomplete (US long positions over $100 million only), but still provides valuable crowding information.

High hedge fund ownership creates fascinating dynamics. These stocks often momentum strongly as funds pile in, but crash violently when funds rush for the exit. The August 2007 quant crisis saw popular quant stocks down 20% in three days as funds deleveraged simultaneously. Smart pods monitor this factor to avoid getting caught in the stampede. Going back to the hotel analogy, these stocks that are "over-owned" by hedge funds are often known as "hedge fund hotels."

Passive Ownership (PASSOWN) measures index fund and ETF ownership. As passive ownership has grown to nearly 50% of market cap, this factor has become crucial. High passive ownership predicts lower volatility, higher correlation with indices, and predictable flows around index rebalances. These stocks that almost no hedge funds own are usually pretty boring an unremarkable, the "NPCs" of the stock market if you will.

Non-Linear Effects: When Relationships Curve

Non-Linear Beta (BETANL) captures the fact that high-beta stocks behave differently in extreme markets than their linear beta suggests. A stock might have 1.2 beta in normal markets but act like 2.0 beta in a crash. The model applies polynomial or spline transformations (mathematical functions that create curves instead of straight lines) to beta, then orthogonalizes (removes any overlap) to regular beta to isolate just the curvature, which you can think of as the part where reality bends away from the straight-line relationship.

If regular beta says "this stock moves 20% more than the market," non-linear beta adds "...but when markets tank 5% or more, it actually moves 100% more than the market." It's the difference between assuming a car always accelerates at the same rate versus recognizing it might have a turbo that kicks in at high speeds.

Non-Linear Size (SIZENL) reflects the mid-cap effect, which is the surprising tendency for medium-sized companies to sometimes trounce both giants and minnows. After orthogonalizing to linear size (stripping out the simple 'bigger is different than smaller' relationship to isolate just the curved, non-linear effects), this factor captures how mid-caps sometimes hit a sweet spot: large enough to have resources and credibility, small enough to be nimble and grow rapidly. This makes sense when you consider that hedge funds need a certain amount of liquidity in a name to even consider getting a decent-sized position in it (for example, anything that trades less than $15 million per day is probably too small for anything but a token position for most bigger pods), but at the same time, once the market cap gets too big, it takes so much incremental money flowing in to move the needle that you tend not to see huge moves. But a $5 billion market cap company could easily go up by 30% in a day if it has a big enough positive surprise, while moves of that magnitude for very large cap companies are extremely rare (although they do happen, as with Oracle when it announced its recent forecasts for AI datacenter revenues increasing 8x over the next couple of years).

The implementation uses carefully chosen spline knots (specific breakpoints where the curve changes direction) to center the effect on true mid-caps. Without this precision, you'd either lump mid-caps with small-caps (missing their unique behavior) or with large-caps (diluting the effect). The math essentially asks: "After accounting for the straight-line relationship between size and returns, is there a 'Goldilocks zone' where being not-too-big and not-too-small provides an edge?"

From Theory to Practice: Attribution and Performance Measurement

Here's where factor models prove their worth: explaining what actually happened. Every day, the system runs attribution that breaks your PnL into pieces. Say you made $2 million yesterday. The attribution might show: Market: $100k, Momentum: 500k,Value:−200k, Industries: $300k, Stock Selection: $1.3 million (this would be a really great outcome actually! It's depressing how much of what you might hope is alpha really turns out to be beta; smaller single-manager hedge funds that don't have access to these kinds of factor models probably go around for years thinking their beta and factor tilts are a result of their stock picking prowess, while Millennium would see through them in a second and take a hard pass!).

This daily attribution builds into monthly and quarterly reports that determine your fate. Platforms want to see high "specific IR" (information ratio on stock-specific returns; basically, your risk-adjusted returns, calculated as excess return divided by tracking error, where a ratio above 1.0 means you're generating more return than the risk you're taking) and low factor timing IR. If most of your profits come from factor timing, you're not adding value beyond what could be achieved with ETFs. Why? Because skill-based profits, if you can maintain them for years, are indicative of superior knowledge and analytical skills and market understanding, and thus more repeatable and underwritable.

The attribution also reveals hidden patterns. Maybe you consistently make money on Tuesdays (when certain factors tend to mean-revert) but lose on Fridays (when momentum extends). Maybe your stock selection is fantastic in technology but terrible in financials. These insights drive process improvements.

Common Failure Modes: How Smart People Blow Up

Even sophisticated pods make predictable mistakes with factor models. The most common is the "hidden factor bet" where alpha signals accidentally correlate with well-known factors. You think you've found an amazing arbitrage in companies with high insider ownership, but really you've just rediscovered the value factor with extra steps.

Another classic failure is ignoring market microstructure in short-horizon signals. Your backtest shows incredible Sharpe ratio on a mean-reversion signal, but it's really just capturing bid-ask bounce. The signal uses last-trade prices which ping-pong between bid and ask for illiquid names. In production, you can't capture this "alpha" without paying the spread.

Standardization errors plague production systems. Factors must be re-standardized daily to maintain comparability, but it's easy to forget this step. When exposures aren't properly standardized, your hedges don't work and your risk forecasts are fantasy.

The ownership factors create their own traps. HFOWN and PASSOWN come from quarterly filings with 45-day delays. Treating them as real-time signals leads to whipsaw as you trade on stale information. Successful pods smooth these factors and use them for risk monitoring, not signal generation.

The Competitive Edge: What Separates Winners from Losers

At top multi-strategy platforms, every pod has access to the same factor models, the same optimizers, and the same risk systems. What separates the winners who get billions in capital from the losers who get shut down?

The winners understand that factor models are tools, not oracles. They use models to understand and control unintended risks while focusing relentlessly on stock-specific alpha. They maintain disciplined processes that keep factor exposures near zero unless they have genuine edge in factor timing.

The losers either ignore factor exposures (and blow up in factor crashes) or become slaves to the model (and never take enough risk to generate returns). They confuse factor exposure with alpha, claiming credit for returns that were really just beta to momentum or value.

The Future Evolution: Where Factor Models Are Heading

Factor models continue evolving as markets change. Alternative data creates new factors: satellite imagery, credit card spending, social media sentiment. Machine learning identifies non-linear combinations of factors that traditional models miss. But the core factors like value, momentum, quality, etc. are still just as relevant as ever, and if you don't know what the factor exposures are of your portfolio, you're basically flying blind. Not only do you not fully understand the risks you're taking in terms of factor tilts and severe imbalances, but you really don't know if you're even adding any value at all, because you can't really say what your alpha is until you really understand and can decompose your betas!

The downside of all this is that Barra charges an insane amount of money for its data and risk tools. But the good news is that Barra publishes a ton of details about how its models work and how its factors are defined, and you can grab these long and dense PDFs, extract the text from them, and then ask GPT-5 Pro to replicate the model for you. Sure, you need to get access to various affordable data APIs to get the historical price data and fundamental data, but it's now absolutely possible to replicate something "close enough" to the Barra models for your own personal use. And I've done exactly that over the last 2 days (along with a bunch of other coding tasks) for internal use in the new hedge fund research system I'm developing. I've gone through many different iterations of having coding agents further polish and refine and improve the model, and I now more or less have it all working. Sorry Mr. Rosenberg, but I won't be paying your company six figures for some linear algebra code!